Managed Kubernetes

The future-oriented container orchestration

Containers have become a standard building block in IT, and the best-known platform for running them is Kubernetes, the open source project that originated at Google. Containers solve a problem that is all too common in IT operations: running software reliably and in a standardised way, regardless of the deployment target. Before containers, virtual machines (VMs) were the primary way to run many isolated applications on a single server. VMs require a hypervisor on the host that abstracts the hardware resources, and each VM carries its own guest operating system.

Containers, however, do not need their own operating system. The container engine provides access to the kernel of the host operating system (host OS). Independent microservices run in the containers, and each individual service can be set up, deployed, scaled and updated separately. This makes containers faster, more portable and easier to update than VMs, and a key building block for digital transformation.

Advantages of using containers

- Speed: Unlike virtual machines, which usually take several minutes to start, containers do not have to wait for an operating system to boot and are ready within seconds.

- DevOps: Their speed, small footprint and resource efficiency make containers ideal for automated development environments and help optimize the CI/CD pipeline.

- Availability: Containers package only the app and its dependencies, which makes them easy to run on a wide variety of platforms.

- Scalability: Unlike virtual machines, containers need no separate operating system, so they are usually small and scale out easily.

- Consistency: Because containers hold all dependencies and configuration internally, developers work in a consistent environment regardless of where the containers are deployed.

Google's open source Kubernetes offering

K8s explained simply - Kubernetes infrastructure

Kubernetes (abbreviated K8s) is orchestration software. It allows software developers and IT administrators to organize, manage, monitor and automate the deployment, scaling, operation and administration of container environments. This management environment coordinates the compute, network and storage infrastructure on behalf of user workloads.

K8s is also a portable, extensible open-source platform with a large, rapidly growing ecosystem. Kubernetes services, support and tools are widely available, and it runs on a variety of IT infrastructures, e.g. local hosting providers, AWS or Azure. Google made the Kubernetes project freely available to everyone in 2014, after gaining over a decade of experience running workloads in large-scale production. Cluster management of this kind becomes a necessity for more complex projects in particular, where a large number of containers is distributed across multiple servers or virtual machines.

Insights into the Kubernetes architecture

Pods: Pods are the smallest unit that can be deployed with Kubernetes. Each pod encapsulates one or more containers, and a pod usually houses a single container running one application or microservice. When a pod contains multiple containers, it is usually because they need to access the same resources. These containers then share the host, storage, and network within the pod.
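As a minimal sketch of such a multi-container pod, the following Python snippet builds a Pod manifest as a plain dictionary and prints it as JSON. The pod name, container names, images and the mount path are illustrative assumptions and do not come from any particular setup.

```python
import json


def build_pod_manifest(name: str) -> dict:
    """Return a Pod manifest with two containers that share one volume."""
    # Hypothetical mount: both containers see the same directory under /data.
    shared_mount = {"name": "shared-data", "mountPath": "/data"}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # Containers in one pod share its network and can mount the same volumes.
            "containers": [
                {
                    "name": "web",
                    "image": "nginx:1.25",  # illustrative image
                    "ports": [{"containerPort": 80}],
                    "volumeMounts": [shared_mount],
                },
                {
                    "name": "content-sync",
                    "image": "busybox:1.36",  # illustrative image
                    "command": ["sh", "-c", "while true; do date > /data/index.html; sleep 10; done"],
                    "volumeMounts": [shared_mount],
                },
            ],
            # An emptyDir volume exists for the lifetime of the pod.
            "volumes": [{"name": "shared-data", "emptyDir": {}}],
        },
    }


print(json.dumps(build_pod_manifest("demo"), indent=2))
```

Because kubectl accepts JSON as well as YAML, the printed manifest could be saved to a file and applied with `kubectl apply -f`.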

Node and Cluster: Pods run on nodes, i.e. virtual or physical machines, which in turn are grouped into clusters. Each node has the services needed to run pods. A cluster has control units called Kubernetes masters (the control plane in current Kubernetes terminology), which are responsible for managing the nodes.
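The relationship between cluster, nodes and pods can be illustrated with a toy model. This is only a sketch under simplifying assumptions: the `Node` and `Cluster` classes and the "most free CPU" placement rule are invented for illustration and are not how the real Kubernetes scheduler decides.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu_millicores: int  # total CPU capacity of the machine
    pods: dict = field(default_factory=dict)  # pod name -> requested CPU

    def free_cpu(self) -> int:
        return self.cpu_millicores - sum(self.pods.values())


@dataclass
class Cluster:
    nodes: list

    def schedule(self, pod_name: str, cpu_request: int) -> str:
        """Place a pod on the node with the most free CPU (toy policy)."""
        candidates = [n for n in self.nodes if n.free_cpu() >= cpu_request]
        if not candidates:
            raise RuntimeError(f"no node can fit pod {pod_name!r}")
        target = max(candidates, key=Node.free_cpu)
        target.pods[pod_name] = cpu_request
        return target.name


cluster = Cluster([Node("node-a", 2000), Node("node-b", 4000)])
print(cluster.schedule("web-1", 1500))  # node-b
print(cluster.schedule("web-2", 1500))  # node-b
print(cluster.schedule("web-3", 1500))  # node-a
```

The managing component tracks what each node still has available and decides where a new pod goes. Once no node has room, the request fails until capacity is added.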

Project Borg and the Kubernetes story: Containerized workloads have been around for quite some time. For over a decade, Google has been using them in its internal production. Whether it's service jobs like web front-ends, infrastructure systems like Bigtable, or batch frameworks like MapReduce, Google mostly uses container technology. Today, we know that Kubernetes is directly based on Borg, Google's internal cluster manager, and many of the Google developers working on Kubernetes were previously involved in Borg. Four central Kubernetes features emerged from the project: Pods, Services, Labels, and IP-per-Pod. Releasing the functionality of the cluster manager led not only to open-source projects around Kubernetes, but also to publicly accessible hosted services such as Google Container Engine (now Google Kubernetes Engine, GKE). Google has handed Kubernetes over to the community through the Cloud Native Computing Foundation (CNCF) and wants to advance container technology through broad community collaboration.
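Labels and Services work together: a Service uses a label selector to find the pods that belong to it. The sketch below shows equality-based matching only. The function names and the sample pods are illustrative assumptions.

```python
def matches(selector: dict, labels: dict) -> bool:
    """True if every key/value pair of the selector is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_pods(selector: dict, pods: list) -> list:
    """Return the names of all pods whose labels satisfy the selector."""
    return [pod["name"] for pod in pods if matches(selector, pod["labels"])]


# Hypothetical pods with their labels.
pods = [
    {"name": "web-1", "labels": {"app": "shop", "tier": "frontend"}},
    {"name": "web-2", "labels": {"app": "shop", "tier": "frontend"}},
    {"name": "db-1", "labels": {"app": "shop", "tier": "database"}},
]

print(select_pods({"app": "shop", "tier": "frontend"}, pods))  # ['web-1', 'web-2']
```

Because pods are matched by label rather than by name or address, pods can be replaced or added without reconfiguring the Service.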

Managed Kubernetes by Medialine

Why is Kubernetes from Google not enough?

Although Kubernetes simplifies the handling of a large container and server landscape, using it successfully requires in-depth knowledge of Kubernetes technologies. Implementation, maintenance, continuous updating and monitoring are often very time-consuming and complex.

With a container-centric management environment like the one provided by Medialine, computing, networking and storage infrastructure are coordinated in a simplified way. This offers much of the simplicity of Platform as a Service (PaaS) coupled with the flexibility of Infrastructure as a Service (IaaS). In order to operate Kubernetes productively and efficiently, competent management is imperative.

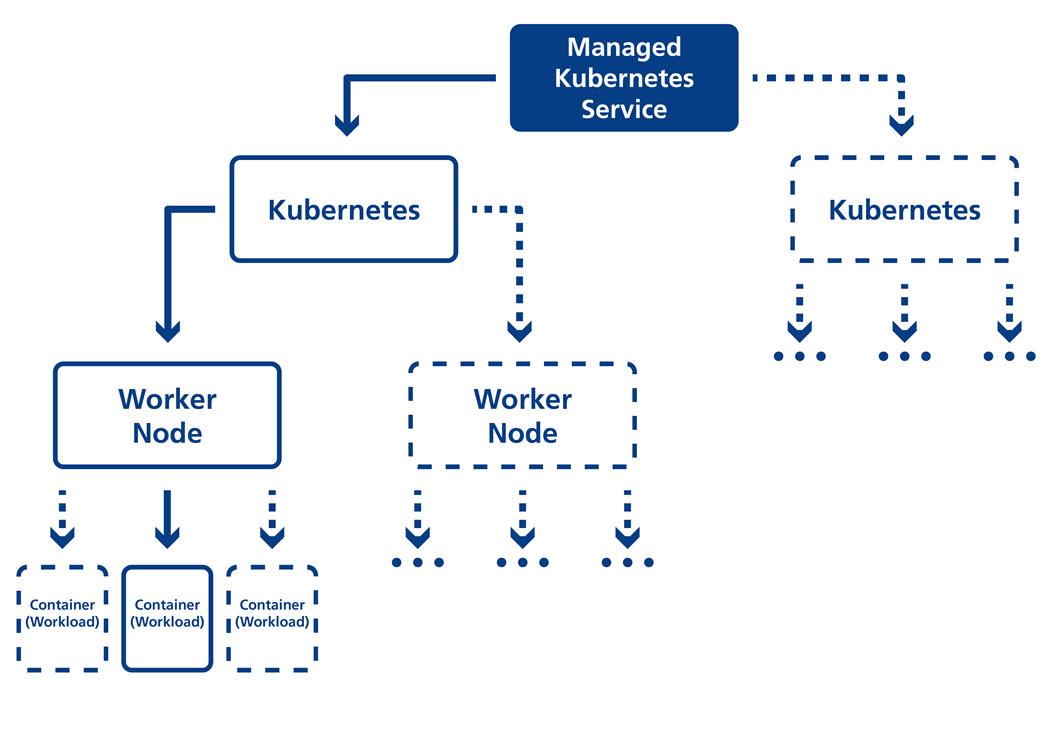

How to manage your containers

With Managed Kubernetes on VMware Tanzu, Medialine enables a fully automated setup of Kubernetes clusters. For example, multiple clusters for test environments can be set up easily in the blink of an eye and deleted again if necessary. Thus, we offer you a Kubernetes setup as simple and compatible as possible.

High-performance workloads through auto-scaling

Unified platform for containers and VMs

Integrated Persistent Storage on a highly available and scalable platform

German data center and EU GDPR (DSGVO) data protection

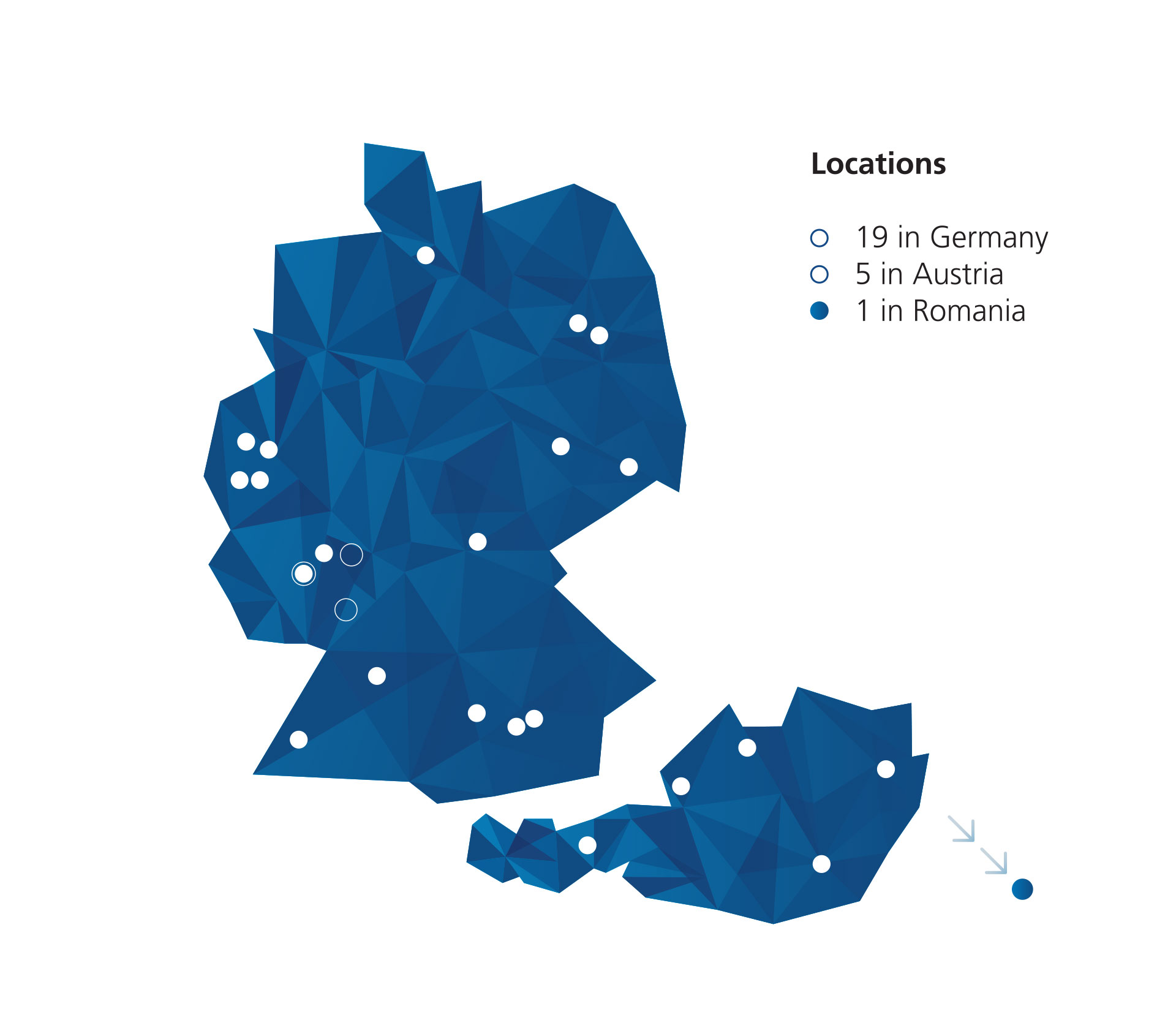

Geo-redundant data center setup across multiple locations on request

Relief for your DevOps teams

Flexible and customized

Support with dedicated contacts

Our unique selling point: The CompanyCloud

The managed Kubernetes service can be easily operated via the CompanyCloud self-service portal. In addition, your own systems can be easily integrated with SaaS applications from the CompanyCloud.

We bridge the gap for you between legacy VMware infrastructures and containerized workloads. With cutting-edge technologies, we provide true multi-tenancy, allowing you to intelligently integrate and view resources that you host in our CompanyCloud, on-premises, or with a hyperscaler within our Kubernetes structure. You'll not only receive a standalone, dedicated Kubernetes cluster instead of a shared cluster structure, but you'll also be able to view and configure all your Kubernetes clusters, consumed resources, memory, and storage independently through our self-service portal. You can freely choose CPU per node, CPU type, RAM per node, storage size per node, storage type, and storage performance class for both worker and master nodes. This means you can tailor all of the properties of your Kubernetes cluster to your specific needs.

Kubernetes Monitoring and Kubernetes Hosting with VMware Tanzu

Tanzu is a product of the VMware family and integrates seamlessly into the entire ecosystem of the market leader. Integration and adoption pose no problem for either the team or the customer, which means that classic VMware infrastructures can still be used. Tanzu makes it possible to run Kubernetes on vSphere, i.e. natively on the hypervisor itself. As a result, VMware Tanzu can be used as a Kubernetes as a Service (KaaS) offering.

In short: The familiar admin interface is also available for the new container environment. The administrator relieves developers of the tedious task of configuring and maintaining Kubernetes, which allows them to concentrate on producing good code. Tanzu includes a whole portfolio of products and services, some developed by VMware itself, some coming from acquisitions. With them, companies can control their applications themselves from development (Build) to management (Manage) to operation (Run).

Tanzu offers:

- Consolidation, standardization and optimization of containers and VMs

- Modernization of traditional apps

- Development of higher quality software

- Faster release cycles through accelerated processes

This product is particularly interesting for everyone who works with Kubernetes on a daily basis. Wherever in-house developers build software products, Tanzu helps to simplify and optimize workflows. Medium-sized companies that want to relieve their developers of infrastructure work should rely on Kubernetes and containers.

Q&A

Virtual machines (VMs) were initially a big leap forward in IT operations, and containers now represent the next paradigm shift. Containers make it possible to separate applications from their runtime environment. They do not require their own operating system, but use that of the system on which they run.

Virtualization thus takes place without a hypervisor. Several containers, isolated from each other, can be operated within one operating system installation. Containers contain both the applications and the operating system components they require. Individually executable, independent microservices run in the containers. Each individual service can be set up, deployed, scaled and updated separately.

Containers can be provided, started and stopped very quickly. If more capacity is needed for a service, new containers are made available. Conversely, containers can be deleted immediately when they are no longer needed.

The provisioning, scaling, load balancing and networking of containers is complex and time-consuming. Container orchestration automates these processes and also offers many options for connecting further interfaces and central functions.
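One scaling decision that orchestration automates can be sketched with the replica formula documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). Whether a particular managed service relies on exactly this autoscaler is an assumption here, and the function name, parameters and bounds are illustrative.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Scale the replica count in proportion to how far the metric is from its target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


print(desired_replicas(4, current_metric=90, target_metric=60))   # 6
print(desired_replicas(4, current_metric=30, target_metric=60))   # 2
print(desired_replicas(3, current_metric=200, target_metric=50))  # 10 (capped)
```

If the pods run hotter than the target, containers are added. If they run cooler, containers are removed, always within the configured minimum and maximum.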

Kubernetes (abbreviated K8s) is open source orchestration software that Google released in 2014. It enables software developers and IT administrators to organize, manage, monitor and automate the deployment, scaling, operation and administration of container environments.

Managed Kubernetes is usually offered as a managed service or Kubernetes as a Service and made available on a separate cloud platform. This also includes the deployment, administration and updating of the Kubernetes container infrastructure as well as the operation of the system environment (nodes).

This means that developers only have to take care of the applications themselves, which run on the nodes. This saves costs and internal resources, making it easier to concentrate on the core business.

Docker is an entire tech stack, of which "containerd" is only one part. containerd is a high-level container runtime in its own right. In a Kubernetes cluster, a container runtime retrieves and runs the container images. Docker has been a popular choice for this runtime (other common options are containerd and CRI-O). However, Docker was not designed to be embedded in Kubernetes, and this leads to problems.

Applications are packaged as so-called Docker images, which are maintained and made accessible in an image registry such as Harbor. The Kubernetes nodes in turn run a container engine (runtime) to execute the containers (see figure).
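An image in a registry is addressed by a reference such as `harbor.example.com/team/app:1.4.2`. The sketch below splits such a reference into registry, repository and tag. It is a simplified parser that follows Docker's conventions for defaults (`docker.io`, `library/`, `latest`); other runtimes may resolve short names differently, and the host name used in the example is hypothetical.

```python
def parse_image_reference(ref: str) -> dict:
    """Split an image reference into registry, repository, tag and digest (simplified)."""
    digest = None
    if "@" in ref:
        ref, digest = ref.split("@", 1)

    # A colon after the last slash separates the tag; an earlier colon is a registry port.
    tag = None
    colon = ref.rfind(":")
    if colon > ref.rfind("/"):
        ref, tag = ref[:colon], ref[colon + 1:]

    first, _, rest = ref.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        # No registry given: assume Docker Hub, as the Docker CLI does.
        registry, repository = "docker.io", ref
        if "/" not in repository:
            repository = "library/" + repository

    if tag is None and digest is None:
        tag = "latest"
    return {"registry": registry, "repository": repository, "tag": tag, "digest": digest}


print(parse_image_reference("harbor.example.com/team/app:1.4.2"))
print(parse_image_reference("nginx"))
```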

Since the end of 2020, Docker has been deprecated as a container runtime (engine) in Kubernetes: version 1.20 announced the deprecation of the built-in Docker integration (dockershim), and version 1.24 removed it. If you use a managed Kubernetes service such as GKE, EKS, or AKS, you need to make sure that your worker nodes use a supported container runtime. If your setup includes customizations for your nodes, you may need to update them based on your environment and runtime requirements.
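To see which runtime the worker nodes report, the node objects can be inspected. Each node exposes its runtime in the field `status.nodeInfo.containerRuntimeVersion`, for example `containerd://...` or `docker://...`. The following sketch filters a node list for nodes that still report Docker. The sample data and node names are invented for illustration.

```python
def nodes_using_docker(node_list: dict) -> list:
    """Return the names of nodes whose reported container runtime is Docker."""
    result = []
    for node in node_list.get("items", []):
        runtime = node["status"]["nodeInfo"]["containerRuntimeVersion"]
        if runtime.startswith("docker://"):
            result.append(node["metadata"]["name"])
    return result


# Hypothetical excerpt in the shape of `kubectl get nodes -o json` output.
sample_node_list = {
    "items": [
        {"metadata": {"name": "worker-1"},
         "status": {"nodeInfo": {"containerRuntimeVersion": "containerd://1.6.8"}}},
        {"metadata": {"name": "worker-2"},
         "status": {"nodeInfo": {"containerRuntimeVersion": "docker://20.10.7"}}},
    ]
}

print(nodes_using_docker(sample_node_list))  # ['worker-2']
```

In practice, the dictionary would come from parsing the JSON output of `kubectl get nodes -o json` instead of the hard-coded sample.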

Yes, Docker images can definitely still be used. Kubernetes has given the green light on its blog. Docker images are still supported because they comply with the OCI (Open Container Initiative) image specification. This means your Docker images can still be run in Kubernetes. The reason is that the image Docker creates is not really a Docker-specific image, but an OCI image. And every OCI-compliant image, regardless of which tool was used to build it, looks the same to Kubernetes. Both containerd (which is part of Docker) and CRI-O can retrieve and run these images.

Docker-Images

Want to know more about containers, Docker and Tanzu? Request your personal consultation!